LLMs vs Google Search: Understanding Their Differences and Use Cases

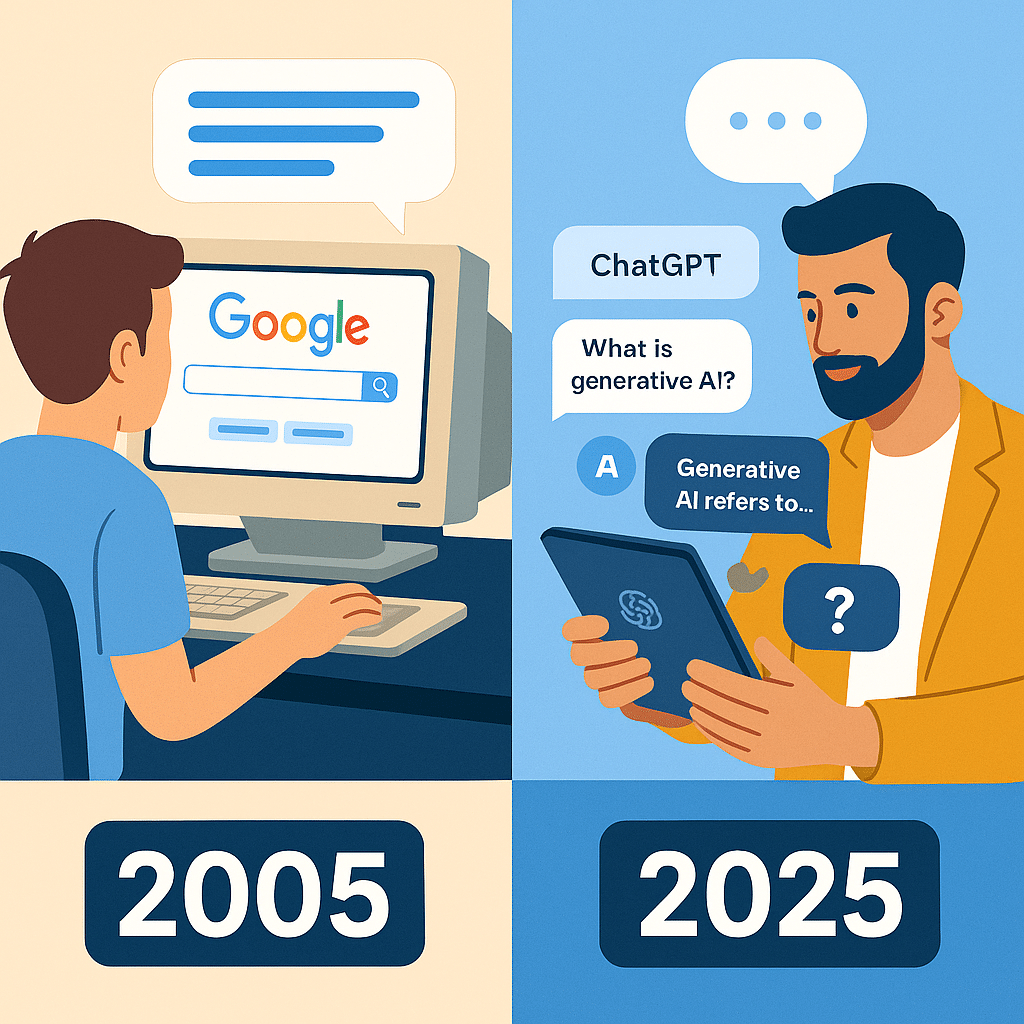

Guys, remember when you first started using Google? 🙂 It was simple: type a few words, hit search, and go down the rabbit hole of blue links. Fast forward to 2025, and we're seeing a shift that's honestly pretty nuts: Large Language Models (LLMs) like GPT‑4 offer conversational answers, not just lists of links. These AI "word wizards" learn from vast text datasets, while Google still crawls and indexes the web. But believe me when I say this: times are changing. More and more people ask "Hey ChatGPT, can you explain this?" instead of typing into a search bar.

Let's explore how these two work, where they shine (or stumble), and what that means for the future of online search. You might have doubts about which one to use, but I'll tell you why understanding both is crucial.

What Are Large Language Models?

Large language models (LLMs) are foundation models trained on vast amounts of data to understand and generate human language and other content. It's pretty amazing when you think about it.

LLMs use the transformer architecture, whose attention mechanism lets the model weigh how relevant each word in a text is to every other word, so it can focus on the right context. I'm not an AI scientist, but this stuff works incredibly well.
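To make "attention" less abstract, here is a minimal sketch of scaled dot-product attention, softmax(Q·K^T / sqrt(d))·V, in plain Python. It's a simplification that assumes a single attention head, toy 2-dimensional vectors, and the same vectors reused as queries, keys, and values; real transformers learn separate projections for each and run many heads in parallel.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # How similar this query is to every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # attention weights, summing to 1
        # The output is the attention-weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Three toy "token" vectors (assumption: no learned projections)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = attention(tokens, tokens, tokens)
print(result)
```

Each output row leans toward the tokens most similar to its query, which is the "focus on relevant context" idea in miniature.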

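They are trained on enormous amounts of text to predict the next word (technically, the next token). Because the training targets come from the text itself rather than from human labels, this is called self-supervised learning, and along the way the model picks up grammar, meaning, and relationships between concepts. After maybe a year or so of using these tools, I can tell you the results are impressive.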
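Here's a deliberately tiny illustration of next-word prediction, assuming a simple bigram word counter as a stand-in for a neural network. Like an LLM, it needs no human labels: each word in the text is the training target for the word before it. Real LLMs work on subword tokens, look at long contexts, and learn probabilities with billions of parameters rather than counting word pairs.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count which word follows which; the 'labels' come from the text itself."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram(corpus)
print(predict_next(model, "the"))  # cat
```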

LLMs power generative AI, enabling organizations to adopt artificial intelligence across diverse business functions and use cases. Honestly, it's been life-changing for many businesses already.

Examples of LLMs include OpenAI's GPT-3/4, Meta's Llama, Google's BERT/PaLM, and IBM's Granite models. There's so much potential here that most people aren't exploiting yet.

How Search Engines Work

Search engines like Google and Bing index web pages, documents, images, and videos, then retrieve the most relevant ones for each user query. You probably use them every day already, right?
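Under the hood, "indexing" usually means building an inverted index: a map from each word to the documents that contain it. Below is a minimal sketch, assuming whitespace tokenization and simple AND matching; the page names are made up, and Google's real index is vastly more sophisticated and not public.

```python
from collections import defaultdict

def build_index(docs):
    """Map each word to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for word in text.lower().split():
            index[word].add(doc_id)
    return index

def search(index, query):
    """Return ids of documents containing every query word (AND semantics)."""
    terms = query.lower().split()
    if not terms:
        return set()
    results = set(index.get(terms[0], set()))
    for term in terms[1:]:
        results &= index.get(term, set())
    return results

docs = {
    "a.html": "llms generate text answers",
    "b.html": "search engines return ranked links",
    "c.html": "llms and search engines can work together",
}
index = build_index(docs)
print(sorted(search(index, "search engines")))  # ['b.html', 'c.html']
```

Lookups stay fast because the engine only touches the documents listed under each query word instead of scanning every page.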

Google isn't just a big index—it's a massive web of systems, and it's nuts how complex it really is:

- It crawls the web with automated bots and indexes the pages they find. (This alone is mind-blowing)

- Uses ranking algorithms (like the old PageRank) to sort content. I had no idea about this stuff before diving deep into SEO.

- Combines signals like keywords, backlinks, and freshness to decide which pages best answer your query. Push through the complexity and you'll understand it.

- Sometimes shows quick answers right on the results page, in featured snippets or Knowledge Panels. Pretty cool feature when it works.

- Drives you to other sites to verify details. This is actually the genius part.
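The ranking step is easier to picture with the classic PageRank idea: a page matters if important pages link to it. This is a minimal sketch of the textbook iterative algorithm, assuming a tiny hypothetical three-page site and the commonly cited damping factor of 0.85. It is not Google's current ranking system, which combines many more signals.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Iterative PageRank over a dict mapping page -> list of outgoing links."""
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start with equal rank everywhere
    for _ in range(iterations):
        # Every page gets a small baseline share (the "random jump")
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outgoing in links.items():
            if outgoing:
                # Split this page's rank evenly among the pages it links to
                share = damping * rank[page] / len(outgoing)
                for target in outgoing:
                    new_rank[target] += share
            else:
                # Dangling page with no links: spread its rank across all pages
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank

web = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "about"],
}
scores = pagerank(web)
print(max(scores, key=scores.get))  # home
```

Because "home" receives links from both other pages, it ends up with the highest score, and the scores always sum to 1.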

You type keywords; Google gives you pages to explore. LLMs give you the info directly. Both have their place, and that's ok.

LLM Use Cases: Real-World Magic

The applications are endless, and I'm in a state of disbelief about what's possible 🙂

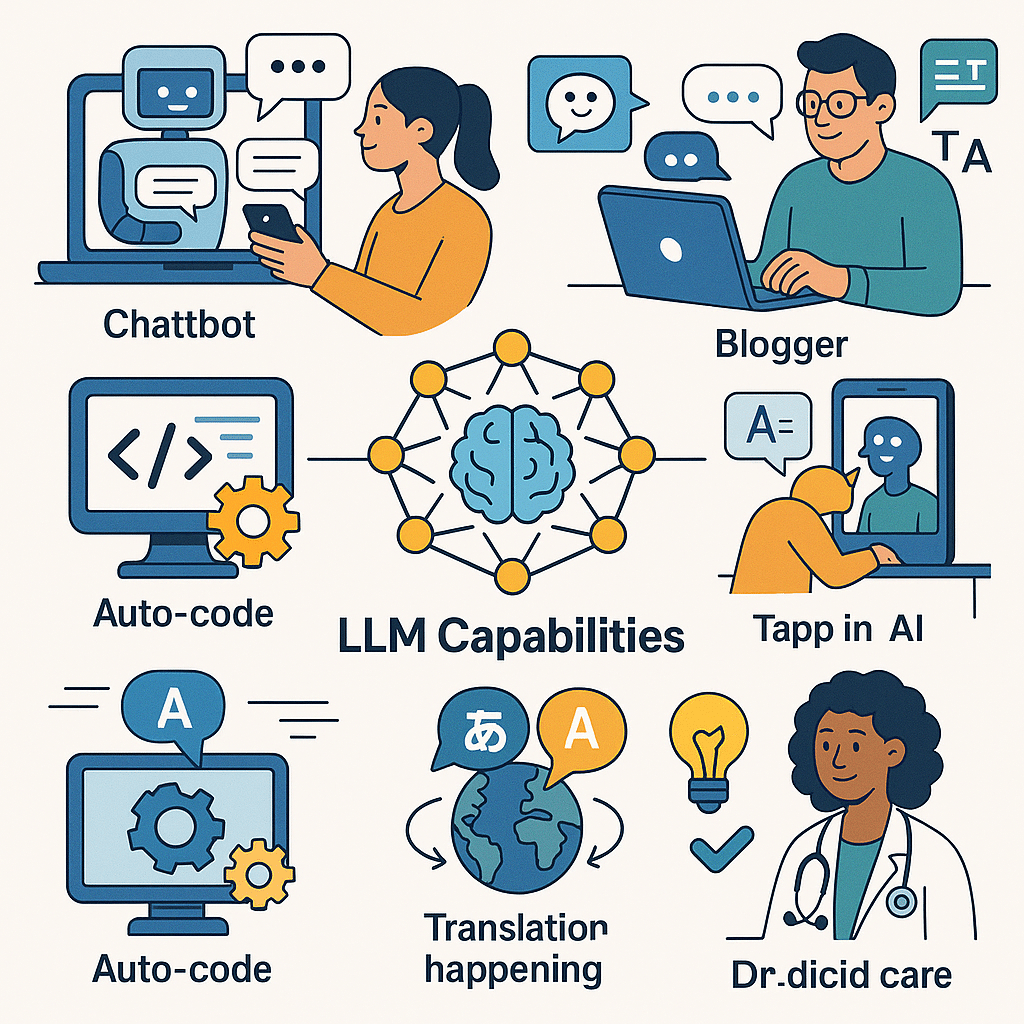

- LLMs enhance conversational AI in chatbots and virtual assistants, improving customer care with context-aware, natural language responses. I've seen businesses transform their support systems.

- They automate content generation, including blog posts, marketing materials, summarization, and research assistance. Honestly, sometimes I feel like these tools write better than I do (and I'm getting better).

- LLMs support code generation and translation between programming languages, as well as language translation and sentiment analysis. Even if you're not a coding machine, you can leverage this.

- Industries like healthcare, finance, and human resources benefit from LLMs by streamlining processes, improving accuracy, and enabling data-driven decisions. The best is yet to come.

Fine-Tuning and Training

LLMs aren't one-size-fits-all, and that's actually pretty awesome:

- Fine-tuning modifies the base model with specialized data—like medical notes or financial reports. You can make these things work for your specific needs.

- Reinforcement Learning from Human Feedback (RLHF) uses human ratings of model answers to steer the model toward helpful replies and away from harmful or biased ones. This stuff is crucial for responsible deployment.

That means better responses in specific fields. A version of GPT‑4 fine-tuned on legal documents can handle legal questions more accurately and carefully than a generic one. I currently rely a lot on specialized models for different tasks.

Retrieval-Augmented Generation (RAG): Best of Both Worlds

Retrieval-augmented generation (RAG) pairs an LLM with a document retriever: before the model answers, the retriever fetches relevant documents and adds them to the prompt as extra context. This is where things get really interesting.
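Here's a minimal sketch of that retrieve-then-generate flow. Assumptions: the retriever just counts overlapping words (real systems usually use BM25 or vector embeddings), the documents and prompt wording are hypothetical, and the final step of sending `prompt` to an LLM is left out because it depends on which model API you use.

```python
def tokenize(text):
    """Lowercase, drop basic punctuation, split into a set of words."""
    return set(text.lower().replace(".", "").replace("?", "").split())

def retrieve(question, documents, k=2):
    """Rank documents by word overlap with the question and keep the top k."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, documents, k=2):
    """Prepend the retrieved passages so the model answers from them."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return (
        "Answer using only the context below. Cite the passage you used.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )

docs = [
    "PageRank scores pages by the links pointing to them.",
    "RAG retrieves documents and passes them to the model as context.",
    "Transformers use attention to weigh context tokens.",
]
prompt = build_prompt("How does RAG use documents?", docs, k=1)
print(prompt)
```

The model never has to "remember" the answer; it reads it from the retrieved context, which is also what makes citing sources possible.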

RAG enables LLMs to generate more accurate and informative text by leveraging external knowledge sources. After working with these systems, I believe this is the future.

This approach can be particularly useful for tasks that require a vast amount of knowledge, such as research and content creation. You may get results that combine the best of both worlds.

RAG can also reduce hallucinations and give a model access to fresh or private information without retraining it. Don't give up on exploring this; it's game-changing.

Prompt Engineering and Optimization

LLMs respond to your prompt, and honestly, writing good prompts is a skill you need to learn. Ask clearly and specifically, and you get better answers:

- "Explain quantum computing for 5th graders." (This works way better than vague prompts)

- "Draft an email that gently pushes for a reply." (Super practical for daily work)

- "Summarize this article in bullet points." (Saves tons of time)

Better prompts = better results. Learning prompt craft is part of using LLMs well. I had to hone my skills here, and it's been worth it.
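One way to keep prompts consistently specific is to build them from parts: task, audience, tone, and output format. This is a hypothetical helper (`compose_prompt` is not from any library), just a sketch of how those pieces turn a vague request into a precise one.

```python
def compose_prompt(task, audience=None, output_format=None, tone=None):
    """Assemble a specific prompt from optional pieces, skipping missing ones."""
    parts = [task.rstrip(".") + "."]
    if audience:
        parts.append(f"Write for {audience}.")
    if tone:
        parts.append(f"Use a {tone} tone.")
    if output_format:
        parts.append(f"Format the answer as {output_format}.")
    return " ".join(parts)

print(compose_prompt("Explain quantum computing", audience="5th graders",
                     output_format="three short bullet points"))
# Explain quantum computing. Write for 5th graders. Format the answer as three short bullet points.
```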

Why Data Sources and Quality Still Matter

This is crucial stuff that many people overlook 🙂

- Data sources and quality are critical factors in the performance of LLMs. Garbage in, garbage out - that's the reality.

- LLMs require vast amounts of high-quality training data to learn patterns and relationships in language. We're talking massive scale here.

- Data quality issues, such as bias and noise, can significantly impact the accuracy and reliability of LLMs. I've seen this firsthand in various projects.

- Ensuring data quality and diversity is essential for developing robust and effective LLMs. This feels a lot like building a solid foundation.
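As a rough illustration of "garbage in, garbage out", here's a sketch of two basic cleaning steps applied to training text: dropping very short fragments and exact duplicates. The `min_words` threshold and sample documents are hypothetical; real data pipelines add near-duplicate detection, quality classifiers, and bias and toxicity filters on top of this.

```python
import re

def clean_corpus(docs, min_words=5):
    """Drop very short docs and exact duplicates (after normalizing whitespace and case)."""
    seen = set()
    kept = []
    for doc in docs:
        normalized = re.sub(r"\s+", " ", doc).strip().lower()
        if len(normalized.split()) < min_words:
            continue  # too short to teach the model much
        if normalized in seen:
            continue  # duplicate of a document we already kept
        seen.add(normalized)
        kept.append(doc)
    return kept

raw = [
    "LLMs learn patterns from large amounts of text.",
    "LLMs  learn patterns from large amounts of TEXT.",
    "click here",
    "Search engines index pages and rank them with many signals.",
]
print(len(clean_corpus(raw)))  # 2
```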

How LLMs Stack Up Versus Traditional Search Engines

LLMs and search engines serve different purposes and functions, despite some overlap in their capabilities. After almost two years of using both extensively, I can tell you they each have their place.

Search engines provide links to external sources, while LLMs generate text responses. Understanding the differences is essential for leveraging their potential effectively.

You might be thinking about which one to use for what, and that's totally normal.

| Feature | Google Search | LLMs (GPT‑4, etc.) |
|---|---|---|
| Response format | Links + snippets | Direct answer, summary, or generated text |
| Up-to-date info | Yes (via crawl/index) | No (unless integrated with RAG/live data) |
| Source transparency | High (clickable sources) | Low (unless citations are included) |
| Conversation mode | No | Yes: follow-up questions, clarifications |
| Rich output | No | Yes: formatting, tone, multi-turn Q&A |
| Ideal for quick facts | Yes | Sometimes, if confidence is high |
| Best for research links | Yes | No |
| Low barrier to entry | Simple: type and go | Learning curve: knowing how to prompt |

Where LLMs Shine, Where Google Still Wins

When LLMs Are Better:

When Google Works Best:

Interactions with LLMs and Search Engines

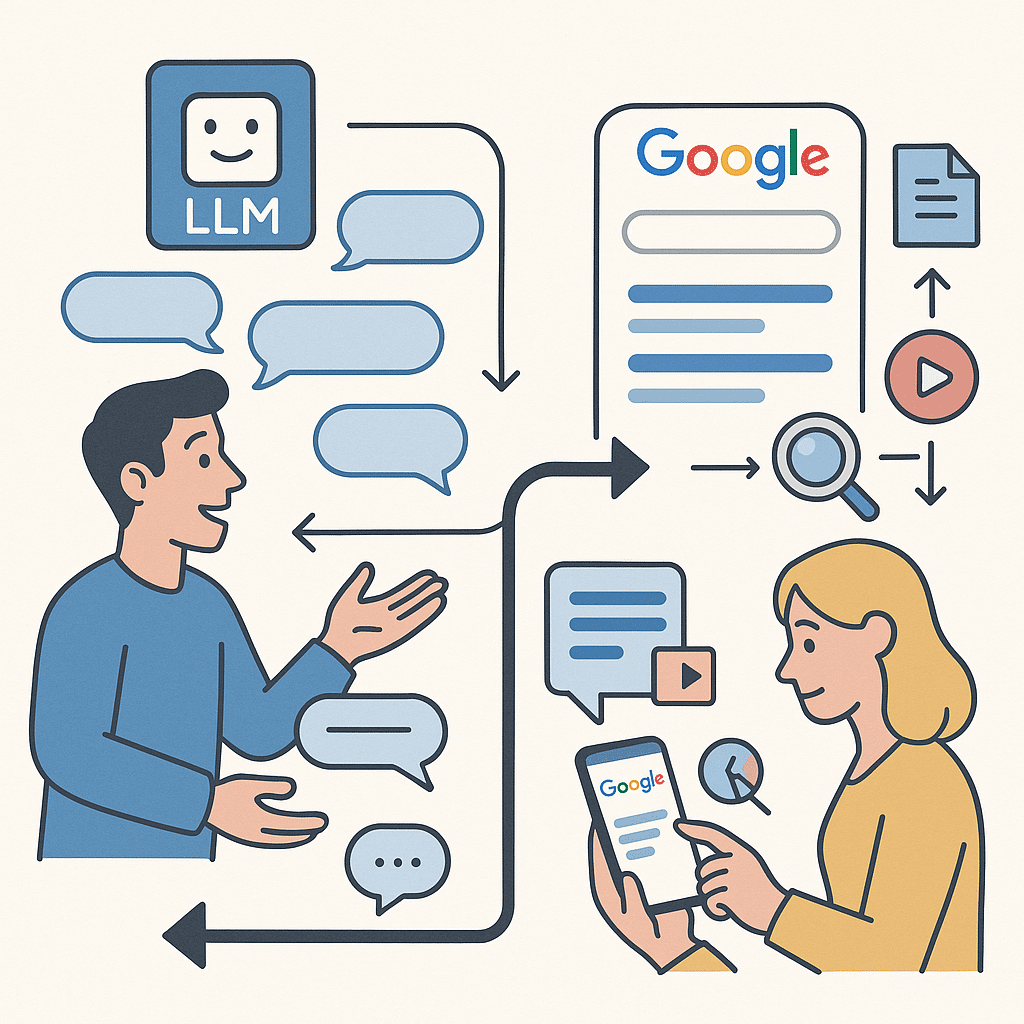

The interaction models are totally different, and once you understand this, everything clicks:

- LLMs support conversational, context-based, back-and-forth dialogue with users. It's like having a conversation with a knowledgeable friend.

- Search engines primarily offer one-way interactions by returning relevant links to queries. Classic but effective approach.

- Google and Bing now provide conversational search results powered by AI. They're adapting, which is smart.

- LLMs generate text responses, while search engines direct users to external content. Both have their place in the workflow.

Output and Results

The outputs are where things get really interesting:

- LLMs generate coherent, human-readable text (and, in multimodal systems, images), though accuracy can vary. Sometimes the results blow my mind.

- Search engines provide links to external sources for users to verify and explore information. This transparency is crucial for research.

- Google's SGE (now called AI Overviews) and Bing's integration of OpenAI's GPT-4 enhance search with AI-generated answers. The hybrid approach is promising.

- When connected to live web search, as in Bing, GPT-4 can deliver informative, relevant, and up-to-date results. When it works, it's magical.

Reliability and Accuracy

LLMs:

So both require scrutiny—fact-checking remains essential.

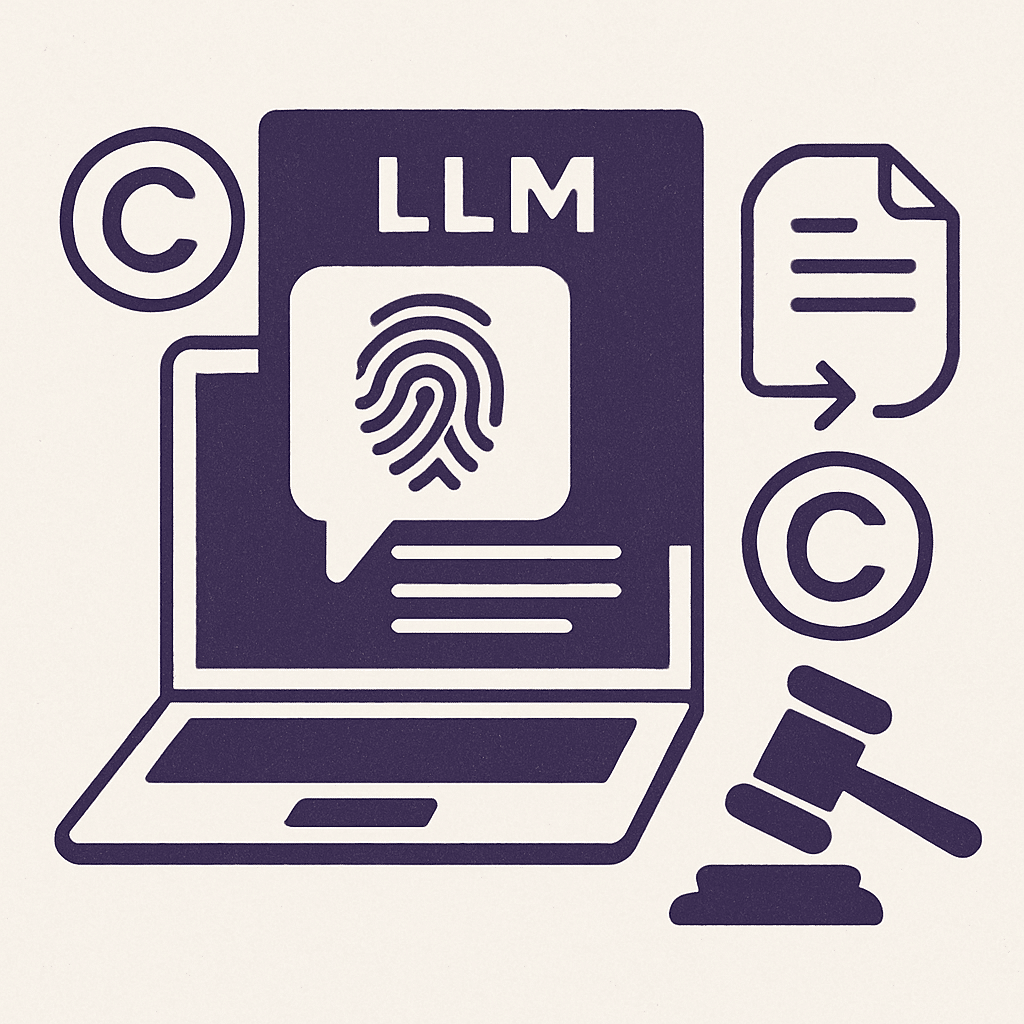

AI Detection and Copyright

LLMs sometimes reproduce text from their training data, and this is getting attention:

- They can reproduce sentences from public sources. Not always intentional, but it happens.

- That risks copyright violations. The legal landscape is still evolving.

- Built-in filters offer some protection, but they're not foolproof. Companies are working on this.
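One simple way to check whether generated text reproduces a source verbatim is to measure n-gram overlap. This sketch assumes five-word windows and lowercase whitespace tokenization; it's an illustrative heuristic, not how any particular provider's filters actually work.

```python
def ngrams(text, n):
    """All runs of n consecutive lowercase words in text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def verbatim_overlap(generated, source, n=5):
    """Share of the generated text's n-grams that also occur in the source."""
    gen = ngrams(generated, n)
    if not gen:
        return 0.0  # text shorter than n words has nothing to compare
    return len(gen & ngrams(source, n)) / len(gen)

source = "the quick brown fox jumps over the lazy dog"
generated = "the quick brown fox jumps over a sleepy cat"
print(verbatim_overlap(generated, source))  # 0.4 (2 of 5 five-word runs match)
```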

In contrast, Google links you to the original sources, which keeps attribution clear and encourages deeper investigation. Honestly, both approaches have merit.

Mechanistic Interpretability

This is advanced stuff, but it's fascinating:

Mechanistic interpretability seeks to reverse-engineer LLMs by discovering symbolic algorithms approximating their inference processes. The research here is mind-blowing.

Sparse coding models and transcoders help identify interpretable features and develop replacement models for better transparency. We're getting closer to understanding these systems.
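Sparse coding becomes more concrete once you see the objective a sparse autoencoder minimizes: rebuild a model's internal activation vector from a larger set of features while keeping most of those features at zero, so each one that fires is easier to interpret. This sketch uses tiny hand-picked weights instead of trained ones and covers only the sparse-autoencoder idea; transcoders, which predict a layer's output from its input, are not shown.

```python
def relu(v):
    return max(0.0, v)

def sae_loss(x, w_enc, b_enc, w_dec, l1_coeff=0.1):
    """Sparse-autoencoder objective for one activation vector x.

    f = ReLU(W_enc x + b_enc) is an overcomplete feature vector and
    x_hat = W_dec f reconstructs x. The loss adds squared reconstruction
    error to an L1 penalty that pushes most features toward zero.
    """
    # Encode: one candidate feature per row of w_enc
    features = [relu(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(w_enc, b_enc)]
    # Decode: each row of w_dec rebuilds one dimension of x from the features
    x_hat = [sum(w * f for w, f in zip(row, features)) for row in w_dec]
    reconstruction = sum((xi - xh) ** 2 for xi, xh in zip(x, x_hat))
    sparsity = l1_coeff * sum(abs(f) for f in features)
    return reconstruction + sparsity, features

# Hand-picked toy weights (assumption): 2-dim activations, 3 features
x = [1.0, 0.0]
w_enc = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b_enc = [0.0, 0.0, -1.5]
w_dec = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]]
loss, features = sae_loss(x, w_enc, b_enc, w_dec)
print(features)  # [1.0, 0.0, 0.0]: only one feature fires
```

Training would adjust the weights to make this loss small across millions of activations; researchers then inspect what inputs make each feature fire.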

Some studies suggest LLMs can plan ahead rather than just predicting one token at a time, revealing deeper insight into how they operate. This changes how we think about AI capabilities.

These techniques enhance trust and responsible deployment by improving understanding of LLM internal workings. Trust is crucial for widespread adoption.

Understanding and Intelligence

The philosophical questions are getting real:

There's an ongoing debate about whether LLMs truly understand natural language or simply predict the next token based on their training data. I go back and forth on this.

Some researchers argue LLMs exhibit emergent reasoning and problem-solving abilities akin to early artificial general intelligence. The capabilities are honestly impressive.

LLMs suffer from hallucinations, confidently generating plausible but incorrect information, challenging reliability. This keeps me humble about their limitations.

Performance is measured with perplexity and benchmarks; bias and misinformation remain concerns despite advances in fine-tuning and retrieval methods. We're getting better, but there's work to do.
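Perplexity is the exponential of the average negative log-probability the model assigned to each actual next token: exp(-(1/N) Σ log p_i). Lower is better; a perplexity of 4 means the model was, on average, as uncertain as if it were picking uniformly among 4 options. This sketch assumes you already have those per-token probabilities (the numbers below are made up).

```python
import math

def perplexity(token_probs):
    """Exponential of the average negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)

# Probabilities a model assigned to each correct next token (hypothetical)
print(perplexity([0.25, 0.25, 0.25, 0.25]))  # ≈ 4.0
print(perplexity([0.9, 0.8, 0.95]))          # much lower: a more confident model
```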

Governance and Regulation

Effective AI governance ensures trustworthy, transparent, responsible, and secure access to AI models and tools.

IBM emphasizes AI traceability and accountability, enabling organizations to monitor and audit AI activities, data, and model origins.

Governance practices are essential for managing AI solutions that revolutionize business operations.

The Future: A Hybrid Landscape

Instead of replacing each other, LLMs and search engines will coexist, and I believe that's the smart approach:

Use LLMs for synthesis, conversation, quick overviews. They excel at this stuff.

Use search for deep, link-based, verifiable research. Google's strength remains here.

Soon you'll ask your AI, "Hey, show sources!" and toggle to Google if needed. The integration is already happening.

Think of this as a new era of smart search+chat combo. The best is yet to come.

Key Takeaways

After this journey of exploring both technologies, here's what I want you to remember:

Different Purposes: LLMs talk back; search engines point you to info. Use each for what they do best.

Use Cases Vary: Creativity vs verification, conversation vs browsing. Context matters.

Fact-Check Always: Both can mislead, so double-check your facts. Push through the verification process.

Ethics Matter: Bias, energy, transparency—all play roles. Stay aware of these issues.

It's Not One or the Other: Use both smartly, depending on your goal. This hybrid approach works.

Conclusion

LLMs and search engines are distinct technologies with different strengths and weaknesses. After working with both extensively, I can tell you they're both valuable in their own ways.

Understanding their differences and use cases is essential for leveraging their potential. Don't quit on learning about both - the knowledge is worth it.

As AI continues to evolve, we can expect to see more integration of LLMs and search engines, leading to improved user experiences and more efficient information retrieval. Honestly, it's an exciting time to be working with these tools.

By recognizing the capabilities and limitations of LLMs and search engines, we can harness their power to drive innovation and progress. If I can figure this stuff out coming from a non-technical background, you can too. Let's do this! 🙂