Understanding How AI Content Detectors Work

So here’s the thing: we’re living in an age where you can’t even trust your own eyes anymore. A headline? Could be human. Could be AI-generated. That essay your student handed in? Looks legit.

But wait—was it written by them, or by ChatGPT at 3 a.m. after five cans of Red Bull? Nobody knows.

That’s why AI detectors exist. They’re like bouncers at the club of the internet, trying to decide who gets in (real writers) and who gets kicked to the curb (AI-generated text). Except sometimes the bouncers are drunk. Or blind. Or both.

Anyway, in this piece we’re diving deep into how AI content detectors work, why they sometimes flag Shakespeare as a bot, and what “false positives” and “false negatives” mean for your content.

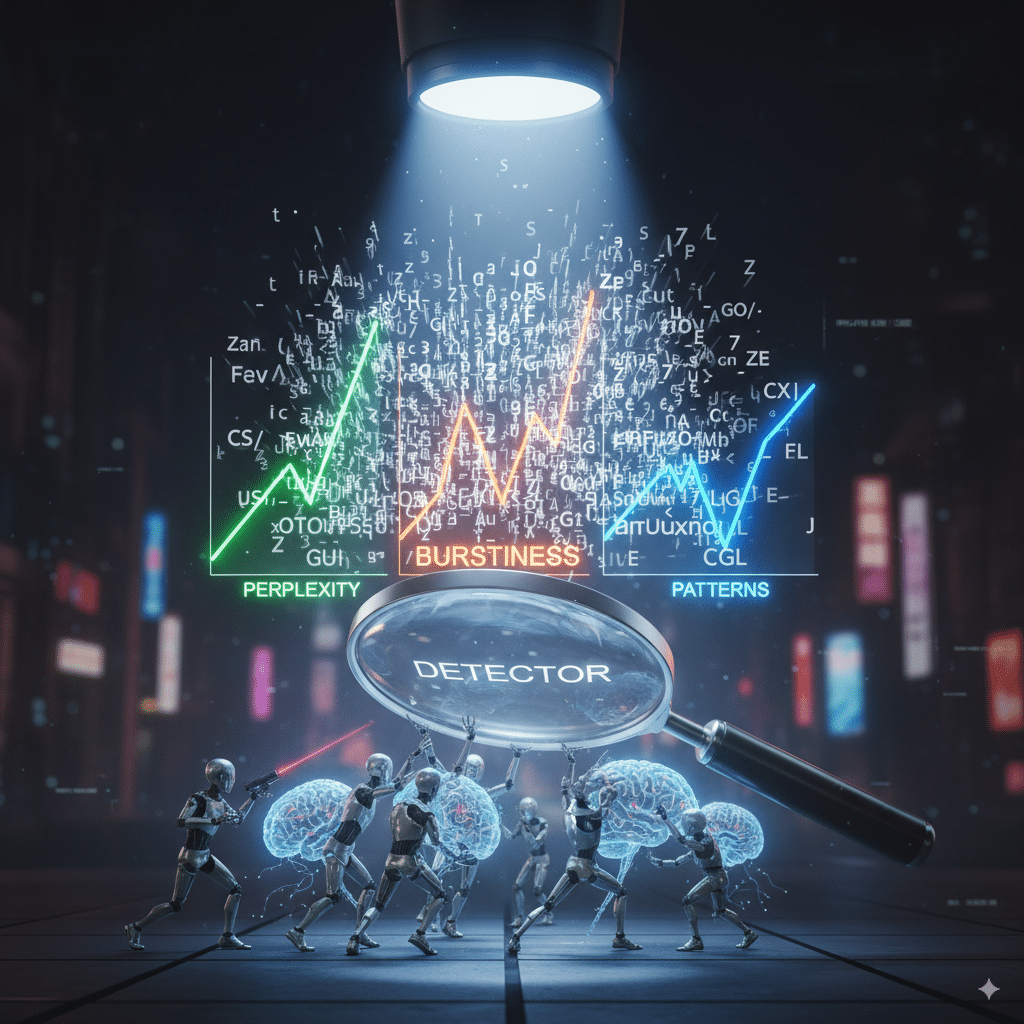

We’ll talk about the methods (perplexity, burstiness, machine learning, all the nerdy stuff), and the challenges (accuracy, bias, constant evolution of AI models).

Let’s go.

What is AI-Generated Content?

Pretty obvious, but let’s set the stage. AI-generated content is text (or images, or video, but we’re focusing on text here) created by AI models like GPT, Claude, Gemini, etc. You’ve seen it. Smooth, sometimes too smooth. Reads like a college essay that hits all the right points but has no soul.

But here’s the kicker: when AI is good, it’s really good. Good enough that teachers, editors, even Google Docs users are asking: how do we tell AI-generated content from human-written content?

That’s where AI detection comes in.

How AI Content Detectors Work

Okay, so how do these mysterious AI content detectors actually work?

- Pattern analysis: AI detectors look at sentence structures, text patterns, and word predictability. Humans write messily. We break rules. We ramble. AI is more… tidy. Almost too tidy.

- Perplexity: Fancy word for “how predictable is this text?” If it’s super predictable (like “The sun is hot. The sky is blue.”), detectors may scream: “Bot!” Lower perplexity = higher chance it’s AI.

- Burstiness: Humans have rhythm. We write a long sentence, then a short one. Then maybe a fragment. AI? More balanced, like a metronome. Detectors love to measure that.

- Training data: Most detectors are trained on giant datasets of human-written text vs. AI-generated text. They learn the “key differences.” At least in theory.

- Machine learning + NLP: Yep, natural language processing. Detectors are AI fighting AI. Which is kind of hilarious if you think about it.
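To make perplexity and burstiness a little less abstract, here’s a toy sketch in Python. Big assumption up front: this is not how GPTZero or any other tool actually computes its scores. Real detectors get word probabilities from a large language model; this sketch swaps in a simple unigram (word-frequency) model fit on a small reference text. Burstiness is measured here as the spread of sentence lengths (standard deviation divided by mean), which is just one way to put a number on it. The function names are made up for this example.

```python
import math
import re
from collections import Counter

# Hypothetical helpers for illustration only; not any real detector's API.


def tokenize(text: str) -> list[str]:
    # Lowercase word tokens; punctuation is dropped.
    return re.findall(r"[a-z']+", text.lower())


def split_sentences(text: str) -> list[str]:
    # Naive splitter: break after ., ! or ? followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under a unigram model fit on `reference`.

    Stand-in for the large language model a real detector would use.
    Lower perplexity means the text is more predictable to the model.
    """
    ref_counts = Counter(tokenize(reference))
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text has no words")
    vocab_size = len(set(ref_counts) | set(tokens))
    total = sum(ref_counts.values())
    log_prob = 0.0
    for tok in tokens:
        # Add-one (Laplace) smoothing so unseen words still get some probability.
        p = (ref_counts[tok] + 1) / (total + vocab_size)
        log_prob += math.log(p)
    # Perplexity is exp of the negative average log-probability per word.
    return math.exp(-log_prob / len(tokens))


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence lengths (std dev / mean).

    0.0 means every sentence has the same length (the metronome);
    higher values mean more long/short variation.
    """
    lengths = [len(tokenize(s)) for s in split_sentences(text)]
    if not lengths or sum(lengths) == 0:
        raise ValueError("text has no sentences")
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean


if __name__ == "__main__":
    reference = "The sun is hot. The sky is blue. The grass is green."
    predictable = "The sun is hot. The sky is blue."
    unusual = "Quartz owls juggle velvet thunder."
    bursty = ("Rain. The storm rolled in off the harbor before anyone "
              "thought to close the shutters. Then silence.")

    print(unigram_perplexity(predictable, reference))  # low: familiar words
    print(unigram_perplexity(unusual, reference))      # high: unseen words
    print(burstiness(predictable))  # every sentence is 4 words long
    print(burstiness(bursty))       # 1, 14 and 2 words: lots of variation
```

Neither number proves anything on its own: a short, formal paragraph written by a human can score low on both, which is exactly how false positives happen.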

👉 The problem? AI detectors aren’t perfect. They sometimes flag content by mistake, and you end up with a false positive telling you your very real article is fake.
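Since “false positive” and “false negative” come up constantly, here’s a small Python sketch of how you could measure both on a test set where you already know the right answers. It assumes you have a list of true labels (True = really AI-written) and the detector’s verdicts (True = flagged as AI); the function name `error_rates` and the sample data are hypothetical.

```python
def error_rates(is_ai: list[bool], flagged: list[bool]) -> dict[str, float]:
    """Compare a detector's verdicts against known ground truth.

    is_ai[i]:   True if sample i really was AI-generated
    flagged[i]: True if the detector labeled sample i as AI
    (Hypothetical helper for illustration, not a real tool's API.)
    """
    if len(is_ai) != len(flagged):
        raise ValueError("lists must be the same length")
    pairs = list(zip(is_ai, flagged))
    humans = sum(1 for real, _ in pairs if not real)
    bots = sum(1 for real, _ in pairs if real)
    # False positive: a human-written sample that got flagged as AI.
    false_pos = sum(1 for real, pred in pairs if not real and pred)
    # False negative: an AI-generated sample that slipped through.
    false_neg = sum(1 for real, pred in pairs if real and not pred)
    return {
        "false_positive_rate": false_pos / humans if humans else 0.0,
        "false_negative_rate": false_neg / bots if bots else 0.0,
    }


if __name__ == "__main__":
    # Made-up test set: four human essays, then four AI essays.
    truth = [False, False, False, False, True, True, True, True]
    verdicts = [False, True, False, False, True, True, False, True]
    # One human wrongly flagged, one AI essay missed.
    print(error_rates(truth, verdicts))
```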

AI detector tools all brag about “highest accuracy rates,” but in practice? I’ve seen whole paragraphs of my own writing (this messy style right here) flagged as AI.
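Part of why “highest accuracy” claims disappoint is plain arithmetic: if only a small share of the documents you check are actually AI-written, even a detector that’s right most of the time can produce as many false accusations as real catches. Here’s a quick Python sketch with made-up numbers (not figures from any real tool): a detector that catches 90% of AI text, correctly clears 90% of human text, and gets pointed at a pile where 10% of documents are AI-generated.

```python
def share_of_flags_that_are_ai(catch_rate: float, clear_rate: float, ai_share: float) -> float:
    """Fraction of flagged documents that really are AI-generated (precision).

    catch_rate: share of AI texts the detector flags (sensitivity)
    clear_rate: share of human texts it correctly leaves alone (specificity)
    ai_share:   share of all checked documents that are AI-generated
    (Hypothetical helper; the inputs are illustrative, not vendor numbers.)
    """
    true_flags = catch_rate * ai_share               # AI texts correctly flagged
    false_flags = (1 - clear_rate) * (1 - ai_share)  # human texts wrongly flagged
    total_flags = true_flags + false_flags
    if total_flags == 0:
        raise ValueError("detector never flags anything")
    return true_flags / total_flags


if __name__ == "__main__":
    # Overall accuracy here is 0.9 * 0.1 + 0.9 * 0.9 = 0.9, so "90% accurate"...
    print(share_of_flags_that_are_ai(0.9, 0.9, 0.1))  # ...yet only about half the flags are right
```

Flip the mix to half AI, half human and the same detector is right about 90% of the time it flags something. The base rate matters as much as the tool.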

AI Detector Tools You Should Know

Let’s name-drop a few because everyone Googles these:

Side note: half these sites make you do a CAPTCHA to verify you are human before you can even check if your writing is human. The irony.

Challenges of AI Detection (aka why this stuff is messy)

Here’s where I rant.

Imagine pouring your soul into an article, only for an AI checker to tell you it’s fake. That’s the frustration plenty of writers face right now.

Why Academic Integrity is the Hot Zone

Educational institutions are where this fight is loudest. Students use AI tools to “help” with essays. Professors panic. Schools install detectors. Chaos ensues.

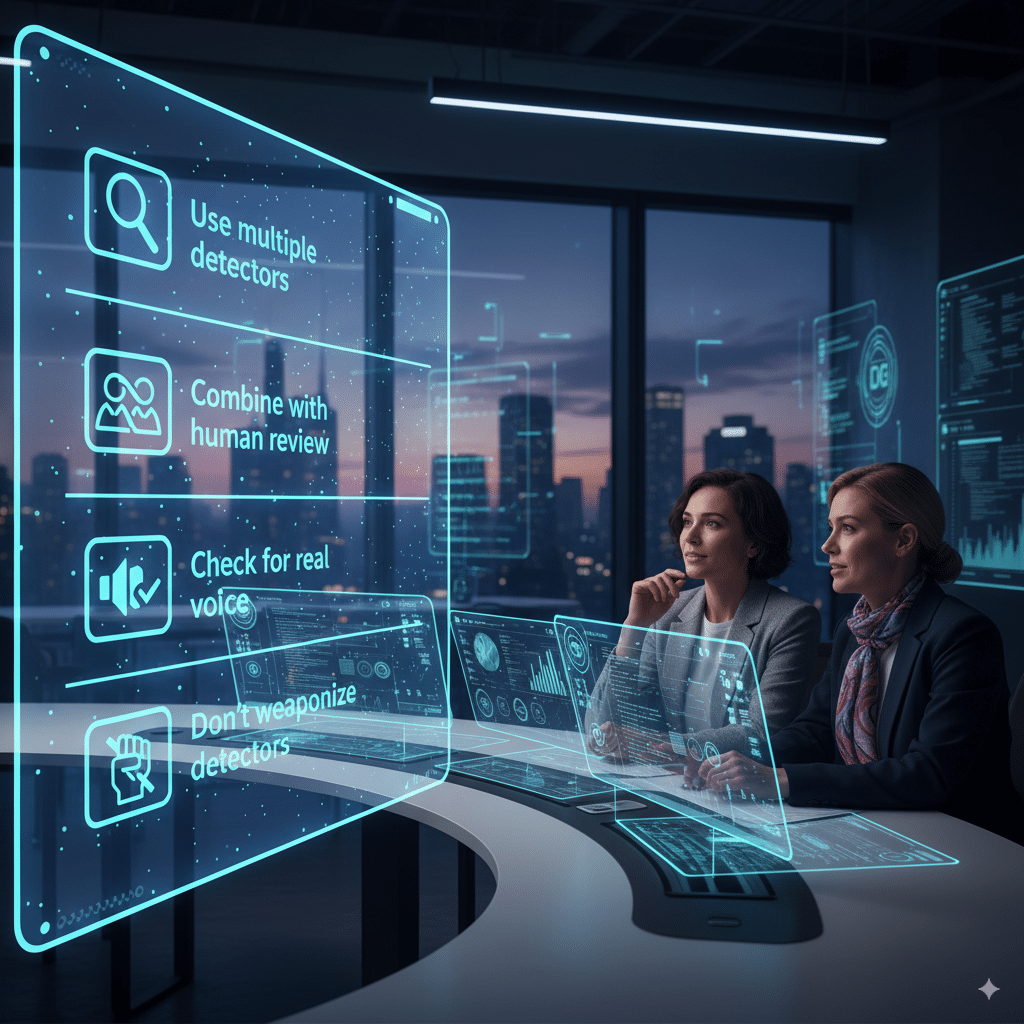

My take? Detectors should be used with human judgment, not as the final verdict.
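If you want to bake that into a workflow, one option is to never let the score trigger a penalty on its own: low scores pass, everything else goes to a person. Here’s a minimal Python sketch of that idea. It assumes the detector hands back an AI-likelihood score between 0 and 1; the cutoffs (0.2 and 0.9) and the routing labels are hypothetical and would need tuning against your own false positive numbers.

```python
def triage(ai_score: float, low: float = 0.2, high: float = 0.9) -> str:
    """Route a document based on a detector's AI-likelihood score (0 to 1).

    No branch issues a verdict: the score only decides whether a human
    looks at the document and how urgently. Thresholds are hypothetical.
    """
    if not 0.0 <= ai_score <= 1.0:
        raise ValueError("ai_score must be between 0 and 1")
    if ai_score < low:
        return "no action"
    if ai_score >= high:
        return "priority human review"
    return "human review"


if __name__ == "__main__":
    for score in (0.05, 0.5, 0.97):
        print(score, triage(score))
```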

The Role of AI Tools in All This

Let’s not villainize AI completely. AI tools are here to stay. Writers use them. Marketers use them. Even Google Search itself is testing AI-generated summaries in search engine results pages (SERPs).

But the balance? Use AI responsibly. Don’t pretend AI writing = your own work. Don’t rely 100% on detectors either. It’s a cat-and-mouse game.

Best Practices for Using AI Detectors

Here’s my no-BS advice if you’re a content agency, teacher, or just paranoid about authenticity:

Quick Comparison: AI Detector Tools

| Tool | Focus Area | Strengths | Weaknesses |
|---|---|---|---|
| GPTZero | Education | Free option, measures burstiness & perplexity | Not always accurate on edited text |
| | Agencies/Marketers | Paid, integrates with SEO workflows | Subscription needed, false positives |
| Turnitin | Schools | Integrated in LMS, plagiarism + AI detection | Students hate it, flags creative text |
| Copyleaks | Enterprise/LMS | API friendly, supports multiple languages | Expensive for small users |
| Sapling | Customer Service | Real-time detection in chat systems | Limited scope outside customer support |

Final Thoughts

Here’s the truth: AI content detection is important, but it’s not perfect. It’ll always lag a bit behind the newest AI writing systems. Writers will always find ways to make AI text look “more human.” Detectors will keep updating. It’s an arms race.

So what do you do? Use detectors. Respect them. But don’t worship them. The real skill is still human judgment. You can’t automate common sense.

FAQs

1. How accurate are AI detectors?

Depends. Some claim 90%+ accuracy, but in real life, false positives and false negatives happen all the time.

2. Can AI detectors flag human-written content by mistake?

Yep. It happens a lot, especially with structured or formal writing.

3. Are AI detectors reliable for academic use?

They’re a tool, not gospel. Schools should combine detectors with human review.

4. What’s the difference between plagiarism checkers and AI detectors?

Plagiarism checkers compare to existing sources. AI detectors analyze patterns in text itself.

5. Can editing AI text fool detectors?

Often yes. A little human editing can reduce the “AI vibe” enough to slip past.

6. Is there a perfect AI checker?

Not yet. And maybe never. The tech evolves too fast.

7. Should marketers worry about detectors for SEO content?

Not too much. Google cares more about value than authorship. But for client trust? Yeah, it matters.
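The plagiarism-vs-detector difference from FAQ 4 is easy to see in code. A plagiarism checker needs a library of existing sources to compare against, while an AI detector scores the text on its own (the perplexity and burstiness signals described earlier). Here’s a rough Python sketch of the plagiarism side, using word-trigram overlap (Jaccard similarity) against a list of known sources. Real checkers use far larger indexes and fuzzier matching; the function names and the choice of trigrams are assumptions for illustration.

```python
import re

# Hypothetical helpers for illustration only; not any real checker's API.


def word_ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    # Lowercased word sequences of length n, e.g. ("the", "sky", "is").
    words = re.findall(r"[a-z']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def max_source_overlap(text: str, sources: list[str], n: int = 3) -> float:
    """Highest Jaccard similarity between `text` and any known source.

    1.0 means identical n-gram sets (likely copied); 0.0 means nothing shared.
    Everything hinges on `sources`: copying from a source that isn't on file
    goes unnoticed, and brand-new AI text shares nothing with any source.
    """
    grams = word_ngrams(text, n)
    best = 0.0
    for source in sources:
        source_grams = word_ngrams(source, n)
        union = grams | source_grams
        if union:
            best = max(best, len(grams & source_grams) / len(union))
    return best


if __name__ == "__main__":
    library = ["The quick brown fox jumps over the lazy dog."]
    print(max_source_overlap("The quick brown fox jumps over the lazy dog.", library))
    print(max_source_overlap("An entirely new sentence about detectors.", library))
```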